Uploading and Downloading Files to/from Amazon S3 using Boto3

Introduction:

Amazon Simple Storage Service (S3) is a scalable object storage service that allows you to store and retrieve any amount of data. In this tutorial, we will guide you through the process of uploading and downloading files to/from an S3 bucket using the Boto3 library in Python.

Prerequisites:

- An AWS account with S3 access.

- AWS access key ID and secret access key.

- Python installed on your machine.

- Boto3 library installed (`pip install boto3`).

Step 1: Set up AWS Credentials:

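Make sure you have your AWS access key ID and secret access key ready. You can create these credentials in the AWS Management Console under "Security credentials" (labeled "My Security Credentials" in older versions of the console). The secret access key is shown only once, when the key is created, so store it somewhere safe.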

If you're unfamiliar with the steps above, I highly recommend checking out this article.

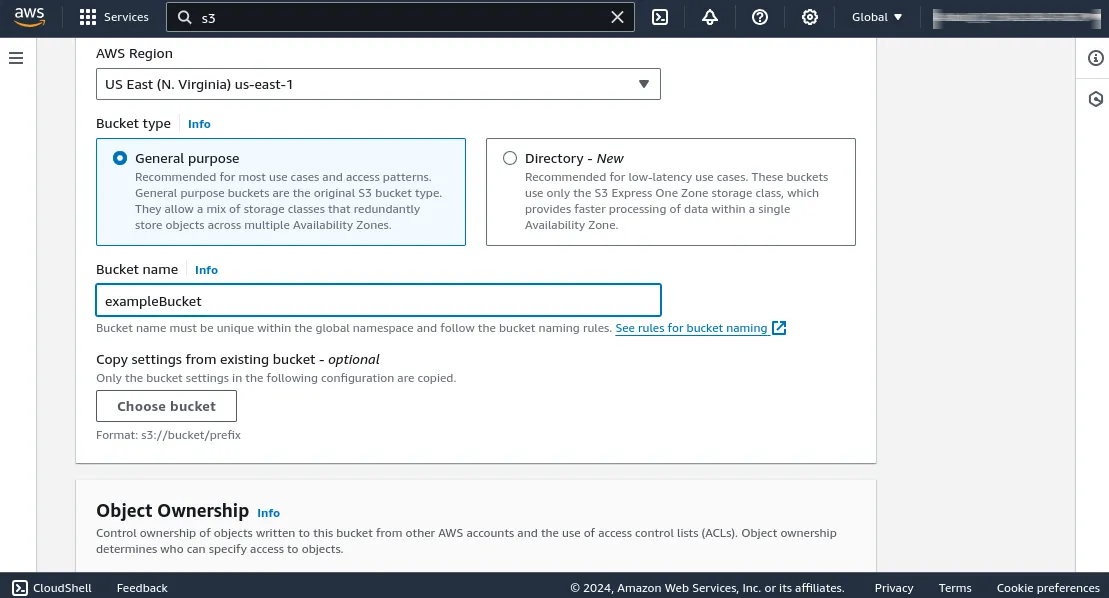
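Before moving on, it can help to confirm that boto3 actually finds your credentials. The sketch below assumes you pass in a `boto3.Session`: `Session.get_credentials()` returns `None` when no credentials are found, and the returned credentials record which provider supplied them in a `method` attribute (for example `env` or `shared-credentials-file`). `describe_credentials` is a hypothetical helper name, and it makes no S3 requests.

```python
def describe_credentials(session):
    """Report whether, and from where, boto3 resolved credentials.

    `session` is expected to be a boto3.Session (anything with a
    get_credentials() method works). No S3 request is made.
    """
    creds = session.get_credentials()
    if creds is None:
        return "no credentials found"
    # botocore records the provider that supplied the credentials in `method`.
    return f"credentials found via {creds.method}"
```

For example, after `import boto3`, run `print(describe_credentials(boto3.Session()))`.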

Step 2: Create an S3 Bucket:

- Log in to the AWS Management Console.

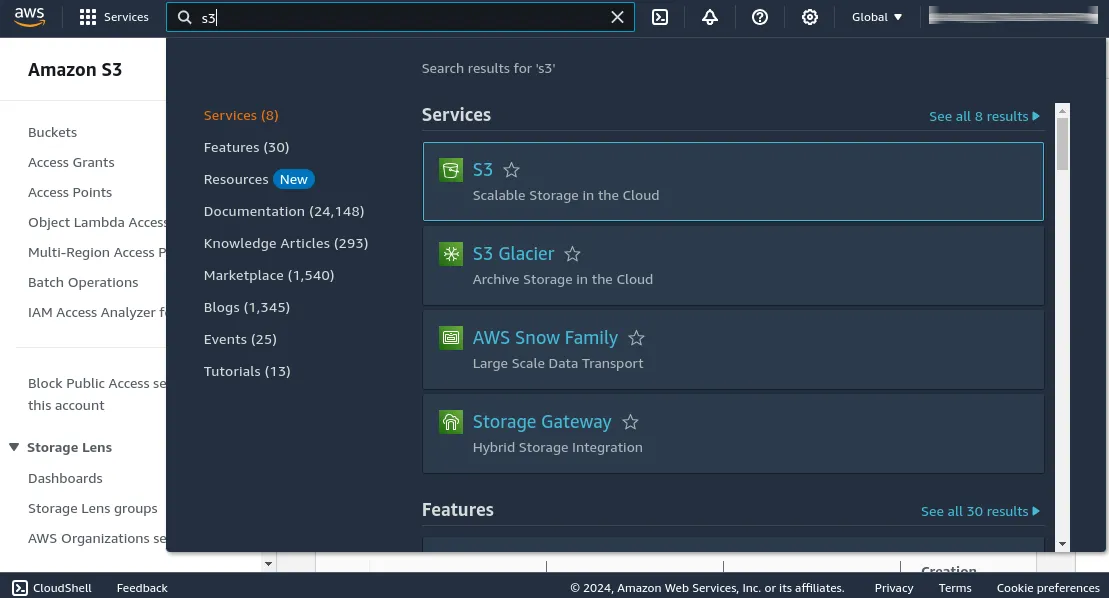

- Navigate to the S3 service.

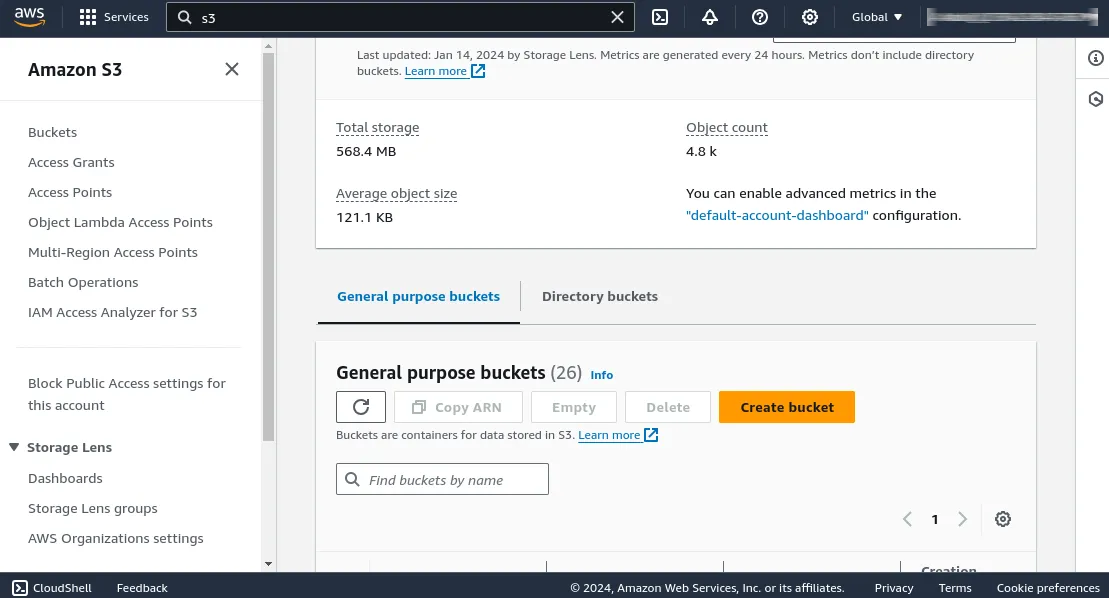

- Click "Create bucket."

- Enter a globally unique bucket name and choose a region.

- Configure additional settings if needed and click "Create bucket."
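The console steps above can also be done from Python. This is a minimal sketch, assuming you pass in an S3 client created for the target region (for example `boto3.client('s3', region_name='eu-west-1')`). `create_bucket` takes the bucket name and, for any region other than `us-east-1`, a `CreateBucketConfiguration` with a `LocationConstraint`; S3 rejects that setting when it names `us-east-1`, so the helper leaves it out there. The helper names are hypothetical.

```python
def create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for an S3 client's create_bucket call."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and naming it explicitly is rejected,
    # so only other regions get a CreateBucketConfiguration.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(s3_client, bucket_name, region):
    """Create a bucket using an S3 client that was created for `region`."""
    return s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region))
```

For example: `create_bucket(boto3.client('s3', region_name='eu-west-1'), 'your-bucket-name', 'eu-west-1')`.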

Uploading a File to S3:

```python
import boto3

# Replace 'your-access-key-id' and 'your-secret-access-key' with your AWS credentials
aws_access_key_id = 'your-access-key-id'
aws_secret_access_key = 'your-secret-access-key'

# Replace 'your-bucket-name' and 'your-object-key' with the S3 bucket and object key
bucket_name = 'your-bucket-name'
object_key = 'your-object-key'
local_file_path = 'path/to/your/local/file'

# Create an S3 client
s3 = boto3.client('s3', aws_access_key_id=aws_access_key_id, aws_secret_access_key=aws_secret_access_key)

# Upload the file
s3.upload_file(local_file_path, bucket_name, object_key)

print(f'File "{local_file_path}" uploaded to S3 bucket "{bucket_name}" with key "{object_key}"')
```

Explanation:

- Replace the placeholder values with your actual AWS credentials, S3 bucket information, and file paths.

- This script uses the Boto3 `upload_file` method to upload a file to the specified S3 bucket.
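`upload_file` also accepts an optional `ExtraArgs` dictionary of object settings such as `ContentType`. The sketch below assumes a hypothetical convention where the object key is an optional prefix plus the local file's name, and the content type is guessed from the file extension with Python's `mimetypes` module. `upload_args` and `upload` are illustrative helper names, and `s3_client` is expected to be a client like the `s3` object above.

```python
import mimetypes
import os


def upload_args(local_file_path, key_prefix=""):
    """Derive the object key and ExtraArgs for one local file.

    Hypothetical convention: key = key_prefix + the file's base name.
    """
    object_key = key_prefix + os.path.basename(local_file_path)
    content_type, _encoding = mimetypes.guess_type(local_file_path)
    # Only set ContentType when the extension maps to a known type.
    extra_args = {"ContentType": content_type} if content_type else {}
    return object_key, extra_args


def upload(s3_client, local_file_path, bucket_name, key_prefix=""):
    """Upload a local file with upload_file and return the key it was stored under."""
    object_key, extra_args = upload_args(local_file_path, key_prefix)
    s3_client.upload_file(local_file_path, bucket_name, object_key, ExtraArgs=extra_args)
    return object_key
```

For example, `upload(s3, 'reports/summary.txt', bucket_name, 'uploads/')` would store the file as `uploads/summary.txt` with `ContentType` set to `text/plain`.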

As a best practice, store both secrets in environment variables rather than in your code, and retrieve them like this:

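```python
import os

# Read the credentials from environment variables instead of hard-coding them.
# AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the standard names boto3 itself looks for.
aws_access_key_id = os.environ.get('AWS_ACCESS_KEY_ID')
aws_secret_access_key = os.environ.get('AWS_SECRET_ACCESS_KEY')
```

Additionally, if you already have a profile set up in the AWS CLI (for example with `aws configure`), you can drop `aws_access_key_id` and `aws_secret_access_key` altogether, because boto3 will automatically start the session with your default profile.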

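Relying on a locally configured CLI profile may not be practical in a DevOps workflow, for example a CI/CD pipeline where no profile exists on the build machine.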
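In a pipeline, credentials are usually injected as environment variables or come from an IAM role attached to the runner. boto3 reads `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` from the environment on its own, so `boto3.client('s3')` needs no arguments there either. When you do rely on those two variables, a fail-fast check gives a clearer error than a failed request later. `missing_aws_env_vars` is a hypothetical helper, and the check should be skipped when the runner uses an IAM role instead.

```python
import os

REQUIRED_AWS_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_env_vars(environ=None):
    """Return the required AWS variable names that are unset or empty.

    `environ` defaults to os.environ; pass a dict to check something else.
    """
    if environ is None:
        environ = os.environ
    return [name for name in REQUIRED_AWS_VARS if not environ.get(name)]
```

A CI step might call `missing_aws_env_vars()` at startup and exit with a message listing the names it returns.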

When boto3 can find credentials on its own, the code reduces to this:

```python
import boto3

# Replace 'your-bucket-name' and 'your-object-key' with the S3 bucket and object key
bucket_name = 'your-bucket-name'
object_key = 'your-object-key'
local_file_path = 'path/to/your/local/file.txt'

# Create an S3 client; boto3 finds credentials on its own (e.g. your default CLI profile)
s3 = boto3.client('s3')

# Upload the file
s3.upload_file(local_file_path, bucket_name, object_key)

print(f'File "{local_file_path}" uploaded to S3 bucket "{bucket_name}" with key "{object_key}"')
```

Downloading a File from S3:

```python
# Reuses the s3 client, bucket_name and object_key from the upload example above.
# Replace the path below with where the downloaded file should be saved
local_download_path = 'path/to/your/local/downloaded/file'

# Download the file
s3.download_file(bucket_name, object_key, local_download_path)

print(f'File downloaded from S3 bucket "{bucket_name}" with key "{object_key}" to local path "{local_download_path}"')
```

Explanation:

- Replace the placeholder values with your actual S3 bucket information and local file path.

- This script uses the Boto3 `download_file` method to download a file from the specified S3 bucket.
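`download_file` writes to exactly the path you give it, so the target folder has to exist already. This is a minimal sketch, assuming you want to mirror an object's key (for example `reports/2024/summary.csv`) as folders under a local download directory. `local_path_for_key` and `download` are hypothetical helpers, and the path check guards against keys containing `..` that would otherwise write outside that directory.

```python
import os


def local_path_for_key(object_key, download_dir):
    """Map an S3 key such as 'reports/2024/summary.csv' to a path under download_dir."""
    base = os.path.abspath(download_dir)
    # S3 keys always use '/', so split on it and rejoin with the OS separator.
    path = os.path.abspath(os.path.join(base, *object_key.split("/")))
    # Refuse keys like '../x' that would resolve outside download_dir.
    if os.path.commonpath([base, path]) != base:
        raise ValueError(f"key {object_key!r} escapes {download_dir!r}")
    return path


def download(s3_client, bucket_name, object_key, download_dir):
    """Download one object into download_dir, creating parent folders first."""
    local_path = local_path_for_key(object_key, download_dir)
    os.makedirs(os.path.dirname(local_path), exist_ok=True)
    s3_client.download_file(bucket_name, object_key, local_path)
    return local_path
```

For example, `download(s3, bucket_name, 'reports/2024/summary.csv', 'downloads')` would save the object as `downloads/reports/2024/summary.csv`.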

Conclusion

Congratulations! You have successfully uploaded and downloaded files to/from Amazon S3 using Boto3 in Python. This tutorial covered setting up AWS credentials and creating an S3 bucket, and provided code snippets for file upload and download.
